Google’s AI Overview isn’t dumb, it’s the nonsensical questions, says Search head – Times of India

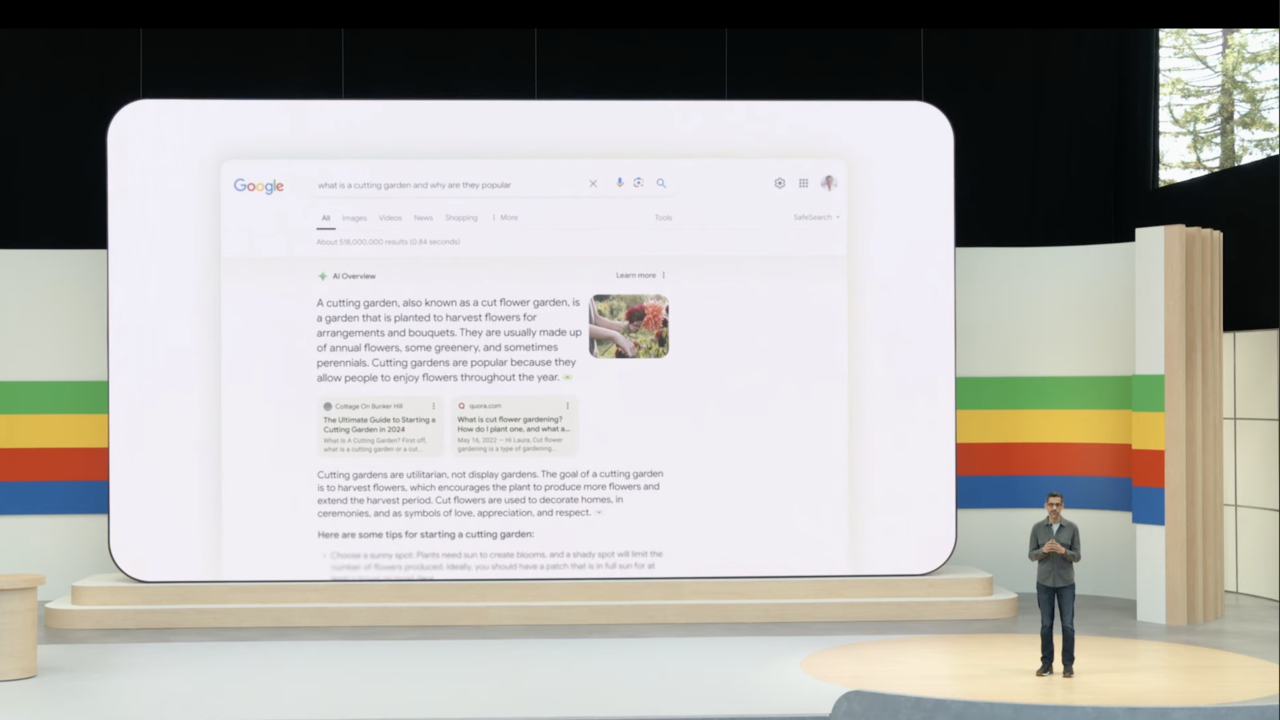

Google has made changes to its new AI Overview search feature after it gave some strange and inaccurate results, like telling people to put glue on pizza and eat rocks. The company has put new rules in place to try to stop its AI from giving bad information.

Last week, Google released AI Overviews to everyone in the United States. The new feature uses artificial intelligence to summarise information from websites and give direct answers to search questions. However, many people quickly found examples of the AI giving very odd and incorrect responses.

In one case, when someone searched “How many rocks should I eat?”, the AI Overview said that eating rocks could provide health benefits, even though this is not true. In another example, the AI told people to use glue to make cheese stick to pizza better. And to query, the AI told

Liz Reid, the head of Google Search, wrote a blog post on Thursday explaining what went wrong. She said that for the rocks question, hardly anyone had ever searched that before. One of the only webpages on the topic was a joke article, but the AI thought it was serious.

“Prior to these screenshots going viral, practically no one asked Google that question,” Reid wrote. “There isn’t much web content that seriously contemplates that question, either. This is what is often called a ‘data void’ or ‘information gap,’ where there’s a limited amount of high quality content about a topic.”

Reid said the pizza cheese glue response came from a discussion forum post. She explained that while forums often have helpful firsthand information, they can also contain bad advice that the AI picked up on.

She defended Google, noting that some of the worst supposed examples spreading on social media, such as the AI allegedly saying pregnant women could smoke, were in fact fake screenshots.

The search executive said Google’s AI Overviews are designed differently from chatbots, as they are integrated with the company’s core web ranking systems to surface relevant, high-quality results. Because of this, she argued, the AI typically does not “hallucinate” information the way other large language models do, and has an accuracy rate on par with Google’s featured snippets.

Reid acknowledged that “some odd, inaccurate or unhelpful AI Overviews certainly did show up,” highlighting areas for improvement. So, Google has now made over a dozen changes to try to fix these issues.

Google has improved its AI’s ability to recognize silly (or, as Reid puts it, “nonsensical”) questions that it shouldn’t answer. It has also made the AI rely less on forums and social media posts that could lead it astray.

For serious topics like health and news, Google already had stricter rules about when the AI could give direct answers. Now it has added even more limits, especially for health-related searches.

Moving forward, Google says it will keep a close eye on the AI Overviews and quickly fix any problems. “We’ll keep improving when and how we show AI Overviews and strengthening our protections, including for edge cases,” Reid wrote. “We’re very grateful for the ongoing feedback.”
